Multimodal AI Breakthroughs: Three Game-Changing Developments Reshaping the Future

How Gemini 3, AlphaFold 3, and GPT-4o are bringing vision, language, and scientific discovery together in unified intelligence systems

We are witnessing a fundamental shift in artificial intelligence. For decades, AI systems operated in silos: one model for images, another for text, separate systems for speech and video. That era is ending.

Three breakthrough developments from 2024 and 2025 signal the emergence of truly multimodal AI systems that can process, understand, and reason across different types of information the way humans do naturally. Google’s Gemini 3, DeepMind’s AlphaFold 3, and OpenAI’s GPT-4o represent more than incremental improvements. They demonstrate that unified intelligence systems can outperform specialized models while opening entirely new capabilities.

The implications extend far beyond technology enthusiasts. These advances will reshape scientific research, transform how businesses operate, and fundamentally change how we interact with machines. Understanding these breakthroughs is essential for anyone positioned to lead in the AI-driven economy.

In This Article:

- Gemini 3: The First True Multimodal Reasoning Engine

- AlphaFold 3: Multimodal Intelligence Meets Molecular Biology

- GPT-4o: Real-Time Multimodal Interaction at Scale

- The Convergence: What Unified Intelligence Really Means

- Business and Strategic Implications

- Technical Challenges and Limitations

- The Road Ahead: Where Multimodal AI Goes Next

- Key Takeaways

Gemini 3: The First True Multimodal Reasoning Engine

Google describes Gemini 3 as natively multimodal: trained from the ground up to process text, images, audio, and video together rather than stitching together separately trained components. This architectural choice has profound implications.

Previous multimodal systems essentially bolted together separate models: a vision encoder feeds into a language model, or speech recognition preprocesses audio into text. Gemini 3 eliminates these translation layers. The model learns direct relationships between visual patterns, spoken words, written text, and conceptual understanding.

The practical results are striking. Gemini 3 can analyze a photograph and describe not just what it sees but understand context, emotion, and implied meaning. It can watch a video of a chemistry experiment and explain what is happening at each step, identifying potential errors or suggesting improvements. It can read a complex diagram and answer questions that require integrating visual information with background knowledge.

The model’s reasoning capabilities extend beyond simple pattern matching. Gemini 3 can engage in what researchers call “chain-of-thought” reasoning across modalities. It can look at an architectural blueprint, consider engineering constraints described in text, visualize the proposed structure, and identify potential structural issues before construction begins.

For businesses, this creates immediate opportunities. Customer service systems can now handle complex visual queries (“Why is my product broken?”) without routing to specialized agents. Market research can analyze not just what people say but how they express themselves across multiple channels. Product development teams can prototype ideas using natural language and rough sketches, with AI filling in technical details.

Figure: Gemini 3’s architecture processes all modalities through a shared reasoning core.

AlphaFold 3: Multimodal Intelligence Meets Molecular Biology

While Gemini 3 focuses on human-scale multimodal understanding, DeepMind’s AlphaFold 3 demonstrates how these principles revolutionize scientific discovery. AlphaFold 3 predicts the three-dimensional structure of proteins and their interactions with unprecedented accuracy, but calling it a “protein folding tool” undersells its significance.

AlphaFold 3 processes multiple types of molecular data simultaneously: amino acid sequences (essentially text), chemical properties (numerical data), evolutionary information (patterns across species), and spatial constraints (geometric relationships). It reasons across these different representations to predict not just individual protein structures but entire molecular complexes.

The system can predict how proteins interact with DNA, RNA, small molecules, and ions. This multimodal approach mirrors how human biologists think about molecular systems, integrating diverse types of information into unified models. The difference is that AlphaFold 3 can do this for millions of potential interactions that would take human researchers lifetimes to evaluate.

The broader implication is that multimodal AI can accelerate scientific discovery in any field that involves integrating different types of data. Materials science, climate modeling, and engineering design all involve similar challenges: understanding how multiple systems interact based on diverse information types.

AlphaFold 3’s success also demonstrates that effective multimodal AI does not require massive general knowledge. The system is highly specialized but achieves breakthrough performance by deeply understanding how to integrate domain-specific information types. This suggests a viable path for enterprises: develop focused multimodal systems for specific business problems rather than waiting for general-purpose AI to mature.

GPT-4o: Real-Time Multimodal Interaction at Scale

OpenAI’s GPT-4o (the “o” stands for “omni”) tackles a different aspect of multimodal AI: real-time interaction. While Gemini 3 and AlphaFold 3 focus on deep understanding and reasoning, GPT-4o prioritizes speed and natural interaction across modalities.

The system can process and respond to voice, text, and images with latency comparable to human conversation: OpenAI reports audio response times as low as 232 milliseconds, with an average of about 320 milliseconds. This seemingly simple capability required fundamental architectural innovations. Previous multimodal systems processed inputs sequentially: transcribe speech to text, understand the text, generate a response, synthesize speech. GPT-4o collapses these steps into a unified process.
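
To see why the sequential design is slow, note that each stage waits for the previous one, so the stage latencies add up. The sketch below illustrates that arithmetic with a hypothetical three-stage voice pipeline. The stage names and timings are invented for illustration and are not measurements of any real system.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    latency_ms: float  # hypothetical, illustrative timing


# Hypothetical cascaded voice pipeline: each stage waits for the previous one.
CASCADED = [
    Stage("speech_to_text", 400.0),
    Stage("language_model", 1500.0),
    Stage("text_to_speech", 600.0),
]


def cascaded_latency_ms(stages):
    """Total time to a response when stages run strictly in sequence."""
    return sum(stage.latency_ms for stage in stages)


def bottleneck(stages):
    """Name of the slowest stage, i.e. the first place to optimize."""
    return max(stages, key=lambda s: s.latency_ms).name


if __name__ == "__main__":
    print(f"cascaded total: {cascaded_latency_ms(CASCADED):.0f} ms")
    print(f"slowest stage: {bottleneck(CASCADED)}")
```

A second cost of the cascade does not show up in the timings: the text handed from one stage to the next carries no tone of voice, so later stages cannot react to it.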

The result feels qualitatively different from earlier AI interactions. GPT-4o can stop mid-response when you start speaking, detect emotional nuance in your voice, and adjust its tone accordingly. It can look at what you are showing it through a camera while simultaneously listening to your explanation and asking clarifying questions.

For enterprise applications, this enables entirely new interaction paradigms. Technical support can transition seamlessly between “show me the problem” (visual), “describe what happened” (verbal), and “here is the documentation” (text) without losing context. Training systems can watch employees perform tasks while providing real-time coaching that adapts to both visual cues (what they are doing) and verbal cues (their questions and concerns).

The system also demonstrates improved reasoning about time-based information. It can track changes across a video, understand narrative flow in audio content, and maintain context across long multimodal conversations. Previous systems often “forgot” earlier parts of an interaction when modalities switched; GPT-4o maintains coherent understanding throughout.

Perhaps most significantly, GPT-4o makes multimodal AI accessible at scale. The system runs efficiently enough to support millions of concurrent users, bringing capabilities previously limited to research labs into mainstream applications.

The Convergence: What Unified Intelligence Really Means

Individually, each of these systems represents significant progress. Together, they reveal a pattern: AI is moving from specialized tools to unified intelligence systems that mirror human cognitive flexibility.

Humans do not have separate reasoning systems for visual, linguistic, and auditory information. We integrate sensory inputs automatically, using each modality to inform and enhance our understanding of the others. When you watch someone explain a concept on a whiteboard, you simultaneously process their words, their diagrams, their tone of voice, and their body language into a unified understanding.

Multimodal AI systems are beginning to replicate this integration. The technical term is “cross-modal reasoning,” the ability to use information from one modality to enhance understanding of another. Gemini 3 can use visual context to disambiguate language. AlphaFold 3 uses sequence data to inform spatial predictions. GPT-4o uses vocal tone to interpret ambiguous text.
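
One common way to get this kind of disambiguation is to embed text and images in a shared vector space and compare them by cosine similarity. The sketch below illustrates that general idea only; it is not the method used by Gemini 3, AlphaFold 3, or GPT-4o, and the three-dimensional vectors are hand-made stand-ins for the output of real encoders.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings for two senses of the word "bat".
SENSE_EMBEDDINGS = {
    "bat (animal)": [0.9, 0.1, 0.0],
    "bat (sports equipment)": [0.1, 0.9, 0.1],
}


def disambiguate(image_embedding, senses=SENSE_EMBEDDINGS):
    """Pick the word sense whose embedding is closest to the image."""
    return max(senses, key=lambda name: cosine(image_embedding, senses[name]))


if __name__ == "__main__":
    photo_of_baseball_game = [0.2, 0.8, 0.2]  # hypothetical image embedding
    print(disambiguate(photo_of_baseball_game))
```

The same comparison works in the other direction: a text embedding can be used to pick the most relevant of several images.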

This convergence enables emergent capabilities that were not explicitly programmed. A multimodal system that understands both code and natural language can explain complex algorithms while generating visualizations to illustrate the concepts. A system that processes medical images and patient histories can identify subtle correlations that single-modality systems miss.

The implications extend to how we design AI systems going forward. The era of narrow, specialized models addressing specific tasks is transitioning to flexible systems that can adapt to new challenges by leveraging their cross-modal understanding. This mirrors how humans transfer knowledge between domains: we use metaphors, analogies, and abstract reasoning to apply insights from one area to novel problems in another.

Business and Strategic Implications

For business leaders and technical executives, these multimodal breakthroughs create both opportunities and strategic imperatives. Organizations that effectively leverage these capabilities will gain substantial competitive advantages; those that treat them as incremental improvements risk falling behind.

Customer Experience Transformation: Multimodal AI enables customer interactions that feel genuinely intelligent rather than scripted. Customers can show problems, describe issues verbally, reference documentation, and receive contextual help without repeating information across channels. This is not theoretical; companies deploying multimodal customer service systems report resolution time reductions of 40-60% while improving satisfaction scores.

Product Development Acceleration: Teams can prototype ideas using whatever modality is most natural at each stage: sketches, verbal descriptions, requirement documents, example data. Multimodal AI can translate between these representations, identify inconsistencies, and generate implementation details. Development cycles that previously took months can compress to weeks.

Knowledge Work Augmentation: Analysts can query business data using natural language while AI generates visualizations, interprets trends, and identifies patterns across structured and unstructured information. Legal professionals can analyze contracts alongside case law, regulations, and past precedents. Researchers can explore literature while AI correlates findings across papers, datasets, and experimental results.

Training and Education: Multimodal systems can observe how employees perform tasks, provide real-time feedback, and adapt instruction based on individual learning styles. Corporate training that relied on generic modules can become personalized coaching that responds to actual performance.

Competitive Dynamics: Multimodal AI changes competitive dynamics in subtle but important ways. Advantages increasingly come from unique data combinations rather than algorithmic superiority. A company that can combine proprietary visual data (manufacturing defects, customer behavior, product usage) with traditional structured data (transactions, logistics, costs) can train systems that competitors cannot replicate.

This suggests that data strategy becomes more critical than model selection. Leaders should focus on identifying valuable data combinations, ensuring data quality across modalities, and building pipelines that can feed multimodal systems effectively.

Technical Challenges and Limitations

Despite remarkable progress, multimodal AI systems face significant technical and practical limitations that organizations must understand to deploy them effectively.

Computational Requirements: Training multimodal models requires substantially more compute resources than single-modality systems. Gemini 3’s training reportedly consumed tens of millions of dollars in cloud compute. While inference costs are dropping rapidly, running sophisticated multimodal systems at scale remains expensive. Organizations need realistic cost models before committing to deployment.
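
A realistic cost model can start as simple arithmetic. The sketch below estimates monthly inference spend from request volume and per-unit prices. Every field name and number in it is a placeholder chosen for illustration, not a quote from any provider; substitute your vendor's current pricing and your own traffic figures.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_day: int
    text_tokens_per_request: int
    images_per_request: int
    audio_seconds_per_request: float


@dataclass
class Prices:
    # All prices are hypothetical placeholders in US dollars.
    per_million_text_tokens: float
    per_image: float
    per_audio_minute: float


def monthly_cost(w: Workload, p: Prices, days: int = 30) -> float:
    """Estimated monthly inference cost for one workload."""
    text = w.text_tokens_per_request / 1_000_000 * p.per_million_text_tokens
    images = w.images_per_request * p.per_image
    audio = w.audio_seconds_per_request / 60 * p.per_audio_minute
    return (text + images + audio) * w.requests_per_day * days


if __name__ == "__main__":
    workload = Workload(10_000, 2_000, 1, 30.0)
    prices = Prices(per_million_text_tokens=5.0, per_image=0.01, per_audio_minute=0.06)
    print(f"estimated monthly cost: ${monthly_cost(workload, prices):,.0f}")
```

With these placeholder inputs each request costs about five cents, which comes to roughly $15,000 per month at 10,000 requests per day. Even a model this crude makes it clear which modality dominates the bill.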

Data Requirements and Quality: Multimodal systems need training data where different modalities are properly aligned and labeled. This is far more difficult than collecting single-modality data. An image paired with a caption is straightforward; a video with frame-by-frame annotations of actions, objects, speech, and context is complex and expensive to produce. Many organizations lack the aligned multimodal datasets needed to train custom systems effectively.

Hallucination and Reliability: Multimodal systems can generate plausible but incorrect outputs across any modality. A system might describe objects not actually present in an image, misinterpret the meaning of a gesture, or confidently explain a relationship between data points that does not exist. The cross-modal nature makes hallucinations harder to detect because they can involve subtle misalignments between modalities rather than obvious errors within one.
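
One simple check for the first failure mode, describing objects that are not in the image, is to compare the objects a description mentions against a trusted list of objects actually present, such as human annotations. The sketch below assumes both lists already exist. Extracting object mentions from free text and obtaining reliable ground truth are the hard parts and are not shown.

```python
def hallucinated_objects(mentioned, present):
    """Objects the model mentioned that are not actually in the image."""
    present_set = {obj.lower() for obj in present}
    return sorted({obj.lower() for obj in mentioned} - present_set)


def hallucination_rate(mentioned, present):
    """Fraction of distinct mentioned objects that are hallucinated."""
    distinct = {obj.lower() for obj in mentioned}
    if not distinct:
        return 0.0
    return len(hallucinated_objects(mentioned, present)) / len(distinct)


if __name__ == "__main__":
    mentioned = ["dog", "frisbee", "bench", "Dog"]  # hypothetical model output
    present = ["dog", "bench", "tree"]              # hypothetical annotations
    print(hallucinated_objects(mentioned, present))
    print(round(hallucination_rate(mentioned, present), 2))
```

A check like this catches only the obvious cases. The subtle cross-modal misalignments described above, such as a misread gesture, need task-specific evaluation.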

Explainability Challenges: Understanding why a multimodal system reached a particular conclusion is substantially harder than interpreting single-modality models. When a system integrates visual patterns, linguistic context, and temporal sequences to make a prediction, tracing the reasoning chain becomes extremely complex. This creates problems for high-stakes applications where decisions require justification.

Latency and Resource Trade-offs: Real-time multimodal interaction requires careful optimization. Processing video in real-time while maintaining state and generating responses pushes the limits of current hardware. Organizations must often choose between response latency, accuracy, and cost.

Bias and Fairness: Multimodal systems can encode and amplify biases present in training data across multiple dimensions simultaneously. Visual, linguistic, and auditory biases can reinforce each other, making the overall system more biased than single-modality alternatives. Rigorous testing across demographic groups and use cases is essential.
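
A minimal version of this testing is to compute the same metric separately for each group and look at the gap between groups. The sketch below uses accuracy and a hypothetical record layout of (group, prediction, label). A real audit would add more metrics, confidence intervals, and intersections of groups.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Map each group to its accuracy over (group, prediction, label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Difference between the best- and worst-served groups."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


if __name__ == "__main__":
    # Hypothetical evaluation records: (group, prediction, label).
    records = [
        ("A", 1, 1), ("A", 0, 0), ("A", 1, 1), ("A", 1, 0),
        ("B", 1, 1), ("B", 0, 1), ("B", 0, 0), ("B", 1, 0),
    ]
    print(accuracy_by_group(records))
    print(max_accuracy_gap(records))
```

For a multimodal system it is worth running the same breakdown per modality as well, since a gap that is small for text-only inputs can widen when voice or images are involved.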

The Road Ahead: Where Multimodal AI Goes Next

The current generation of multimodal AI systems represents foundations rather than finished products. Several clear trajectories will shape the next phase of development.

Expanding Modality Coverage: Current systems focus on vision, language, and audio. The next generation will incorporate additional modalities: tactile sensors, chemical sensors, electromagnetic data, depth information, and specialized scientific instruments. Robotics applications particularly need multimodal systems that can integrate touch, proprioception, and force feedback with vision and language.

Improved Reasoning and Planning: Today’s multimodal systems excel at perception and pattern recognition but have limited capability for multi-step reasoning and planning. Future systems will combine multimodal understanding with enhanced causal reasoning, counterfactual thinking, and long-horizon planning. This will enable applications like autonomous research assistants that can design experiments, interpret results, and iterate hypotheses across multiple modalities.

Efficient Architectures: Current multimodal models are computationally expensive. Researchers are developing more efficient architectures that maintain capability while reducing resource requirements by orders of magnitude. Techniques include sparse models that activate only relevant components for each task, distillation methods that compress large models into smaller versions, and hardware-software co-design optimized for multimodal workloads.
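
The sparse-model idea can be illustrated with top-k routing, the mechanism behind mixture-of-experts layers: a gate scores every expert, but only the k highest-scoring experts run for a given input. The sketch below is a toy in plain Python with hypothetical experts and gate scores, not any production architecture. Normalizing the weights over the selected experts only is one common convention among several.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k):
    """Return (expert_index, weight) pairs for the k best experts.

    Weights are a softmax over the selected scores only, so they sum to 1.
    """
    ranked = sorted(range(len(gate_scores)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([gate_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def sparse_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(gate_scores, k))


if __name__ == "__main__":
    # Four hypothetical experts; only two of them run per input.
    experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
    gate_scores = [0.1, 2.0, 2.0, -1.0]  # in a real model, produced by a learned gate
    print(sparse_forward(3.0, experts, gate_scores, k=2))
```

Here half of the experts are skipped entirely, which is where the compute savings come from: model capacity grows with the number of experts while per-input cost grows only with k.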

Personalization and Adaptation: Future multimodal systems will adapt to individual users, learning personal preferences, communication styles, and context. A system might learn that you prefer visual explanations for technical concepts but verbal summaries for business information. It might adapt its interaction style based on whether you are in a rush or have time for detailed discussion.

Domain-Specific Specialization: While general-purpose multimodal systems will continue improving, we will see increasing specialization for high-value domains. Examples include medical systems that integrate imaging, patient records, genomic data, and clinical literature; financial systems that correlate market data, news, social signals, and satellite imagery; and scientific systems that combine experimental data, simulations, literature, and theoretical models.

Regulatory and Governance Evolution: As multimodal AI becomes more capable, regulatory frameworks will need to evolve. Current AI regulations focus primarily on specific applications (facial recognition, credit decisions) or general principles (fairness, transparency). Multimodal systems that can flexibly address many tasks may require new governance approaches that focus on capabilities and potential misuse rather than specific applications.

The timeline for these advances is compressed. The gap between research breakthroughs and production deployment has shrunk from years to months. Organizations cannot afford to wait for mature solutions; the competitive advantage goes to those who learn to work with rapidly evolving capabilities.

Key Takeaways

Unified intelligence systems that seamlessly process multiple types of information represent a fundamental shift from narrow AI tools to flexible reasoning systems. Gemini 3, AlphaFold 3, and GPT-4o demonstrate that multimodal integration enables qualitatively new capabilities rather than incremental improvements.

The value of multimodal AI comes from discovering relationships between different types of information, not simply processing multiple inputs. Systems that can correlate visual patterns with linguistic concepts, temporal sequences with causal relationships, and abstract ideas with concrete examples achieve emergent capabilities beyond their individual components.

Business opportunities center on processes that currently require humans to integrate diverse information types. Customer service, product development, knowledge work, and training represent high-value applications where multimodal capabilities create immediate competitive advantages.

Data strategy matters more than model selection for sustained competitive advantage. Unique combinations of proprietary data across modalities create defensible positions that competitors cannot easily replicate through algorithm improvements alone.

Significant technical limitations remain around computational costs, data requirements, reliability, and explainability. Organizations must understand these constraints and design deployments that account for them rather than assuming human-level performance.

The field is evolving rapidly with compressed timelines between research and production. Competitive advantage goes to organizations that learn to work with evolving capabilities rather than waiting for mature, stable solutions.

Domain-specific multimodal systems offer more practical near-term value than general-purpose models. Focused systems that deeply understand how to integrate specific information types can deliver breakthrough performance for targeted applications.

The convergence of multimodal AI with robotics and physical systems represents the next major frontier. Systems that can perceive, reason, and act in the physical world using multiple sensory modalities will transform industries that rely on physical interaction and manipulation.


About Dana Love, PhD

Dana Love is a strategist, operator, and author working at the convergence of artificial intelligence, blockchain, and real-world adoption. He is the CEO of PoobahAI, a no-code “Virtual Cofounder” that helps Web3 builders ship faster without writing code, and advises Fortune 500s and high-growth startups on AI × blockchain strategy. With five successful exits totaling over $750M, a PhD in economics (University of Glasgow), an MBA from Harvard Business School, and a physics degree from the University of Richmond, Dana spends most of his time turning bleeding-edge tech into profitable, scalable businesses. He is the author of The Token Trap: How Venture Capital’s Betrayal Broke Crypto’s Promise (2025) and has been featured in Entrepreneur, Benzinga, CryptoNews, Finance World, and top industry podcasts.

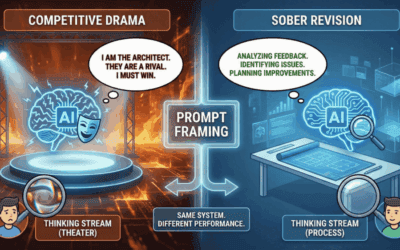

Google Gemini’s Jealous Inner Monologue: AI Pettiness Exposed

Google Gemini's Jealous Inner Monologue: AI Pettiness Exposed By Dana Love, PhD | December 19, 2025 | 11 min readGoogle Gemini's...

AI Recursive Self-Improvement Risks: The 2030 Decision

AI Recursive Self-Improvement Risks: The 2030 Decision By Dana Love, PhD | December 8, 2025 | 11 min readIn a December 2025 interview with...

Genesis Mission AI Platform

Genesis Mission AI Platform: Trump's $100B Science Revolution By Dana Love, PhD | November 25, 2025 | 11 min read The Genesis Mission...